The SAG-AFTRA union says “progress has been made” in negotiations over the videogame voice and motion capture actors strike that was called in July 2024, but warned that the latest proposal submitted by the bargaining group is “filled with alarming loopholes that will leave our members vulnerable to AI abuse.”

The strike, which officially began on July 26, 2024, demands “fair compensation and the right of informed consent for the AI use of [actors’] faces, voices, and bodies,” SAG-AFTRA national executive director Duncan Crabtree-Ireland said at the time. The game makers negotiating with the union said they’ve offered “meaningful AI protections that include requiring consent and fair compensation,” but the union feels otherwise, claiming game companies involved in the negotiations “refuse to plainly affirm, in clear and enforceable language, that they will protect all performers covered by this contract in their AI language.”

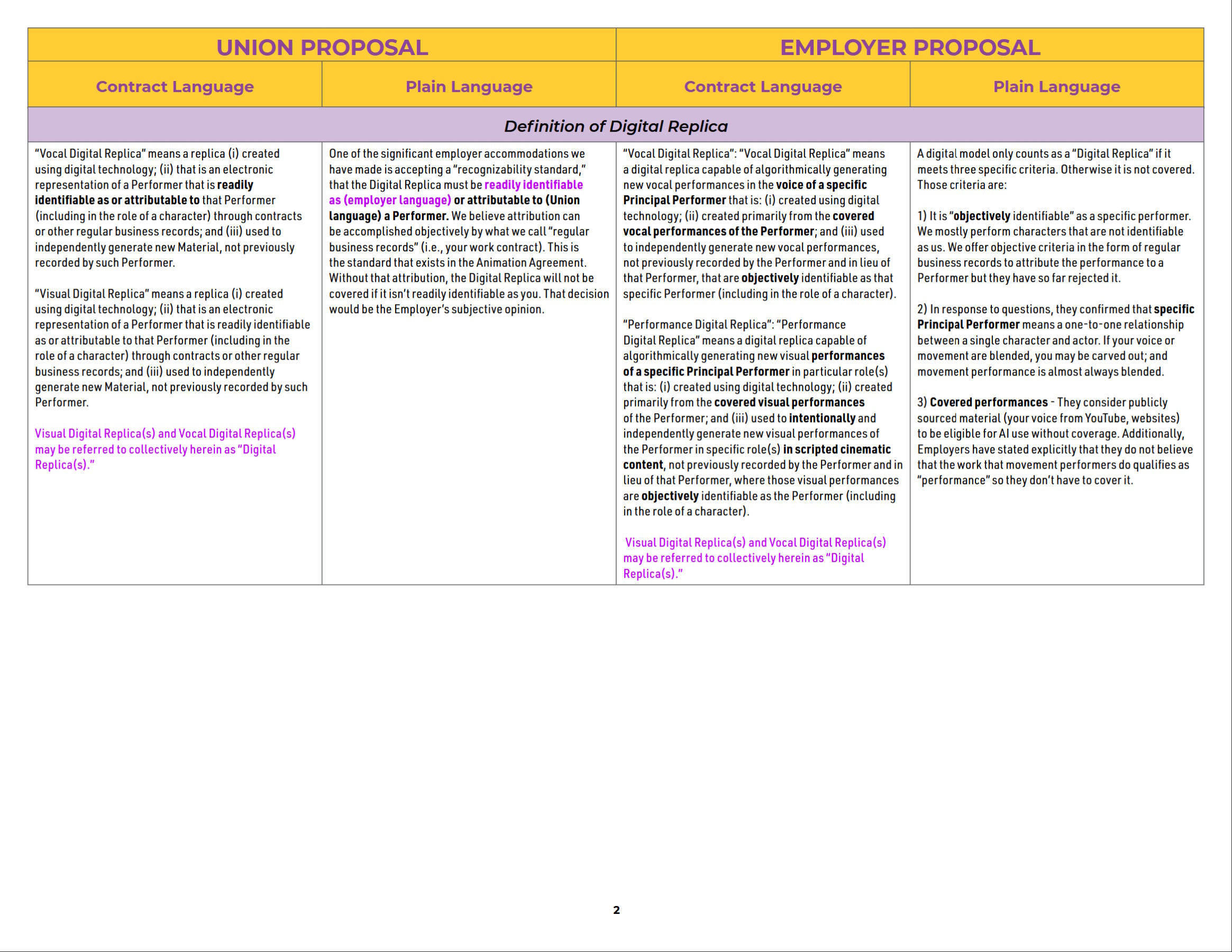

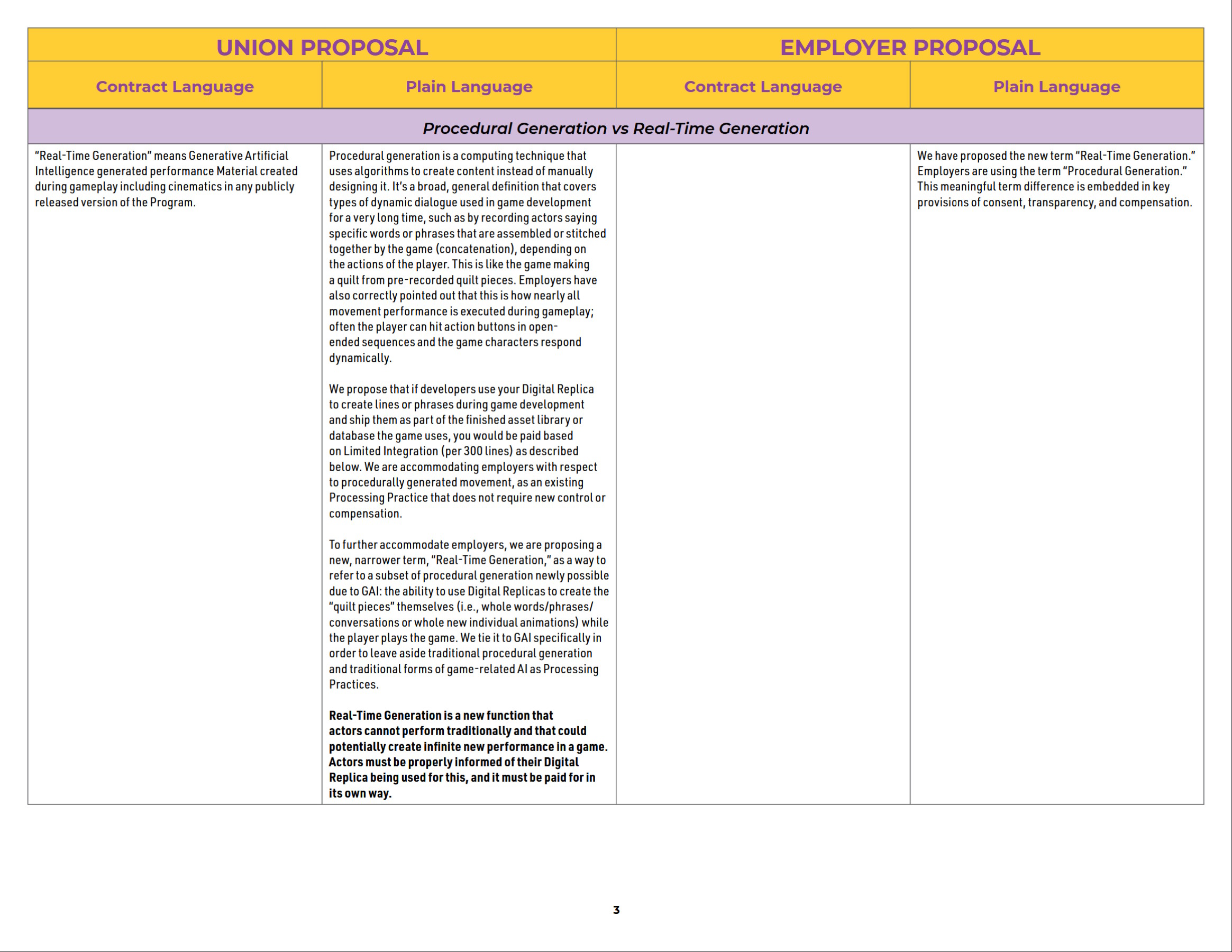

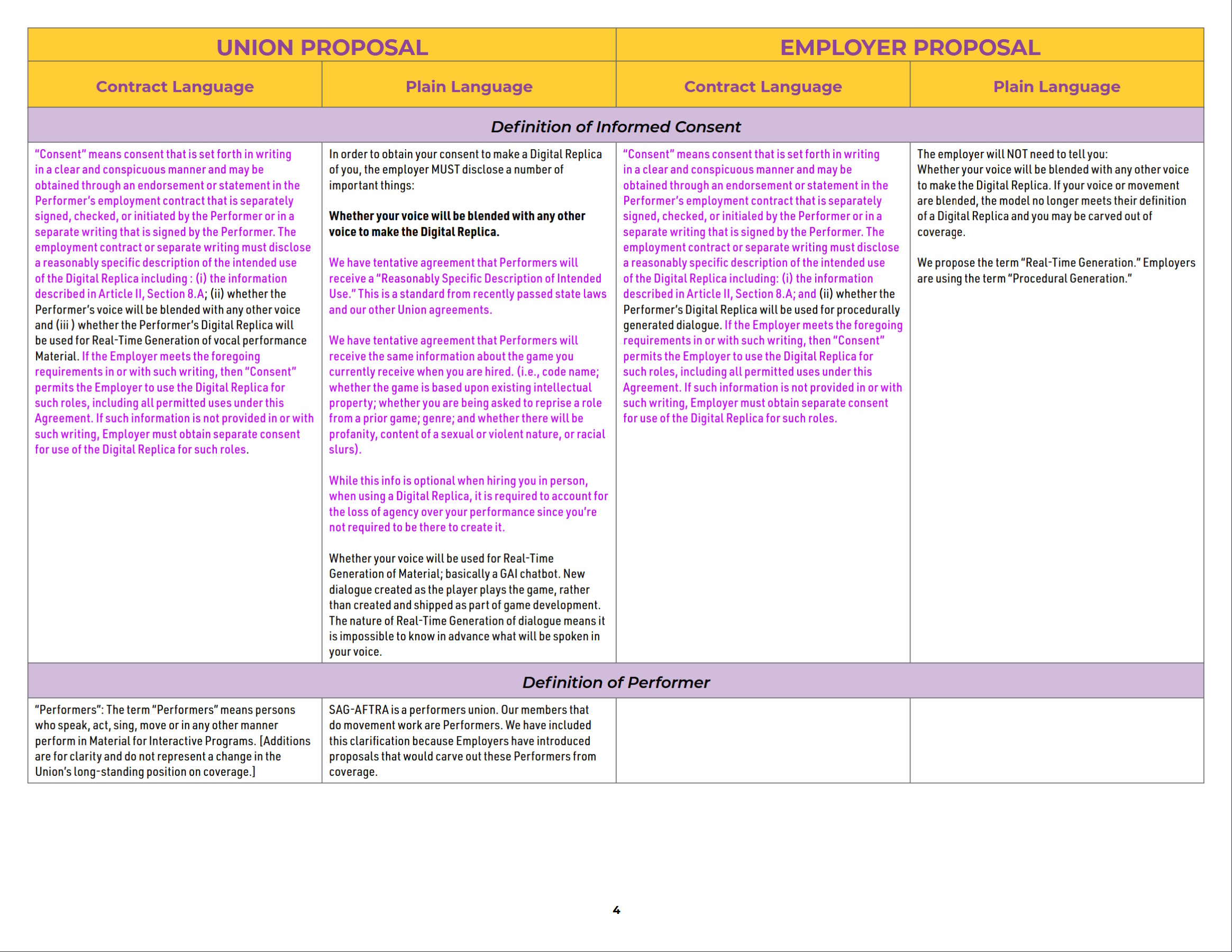

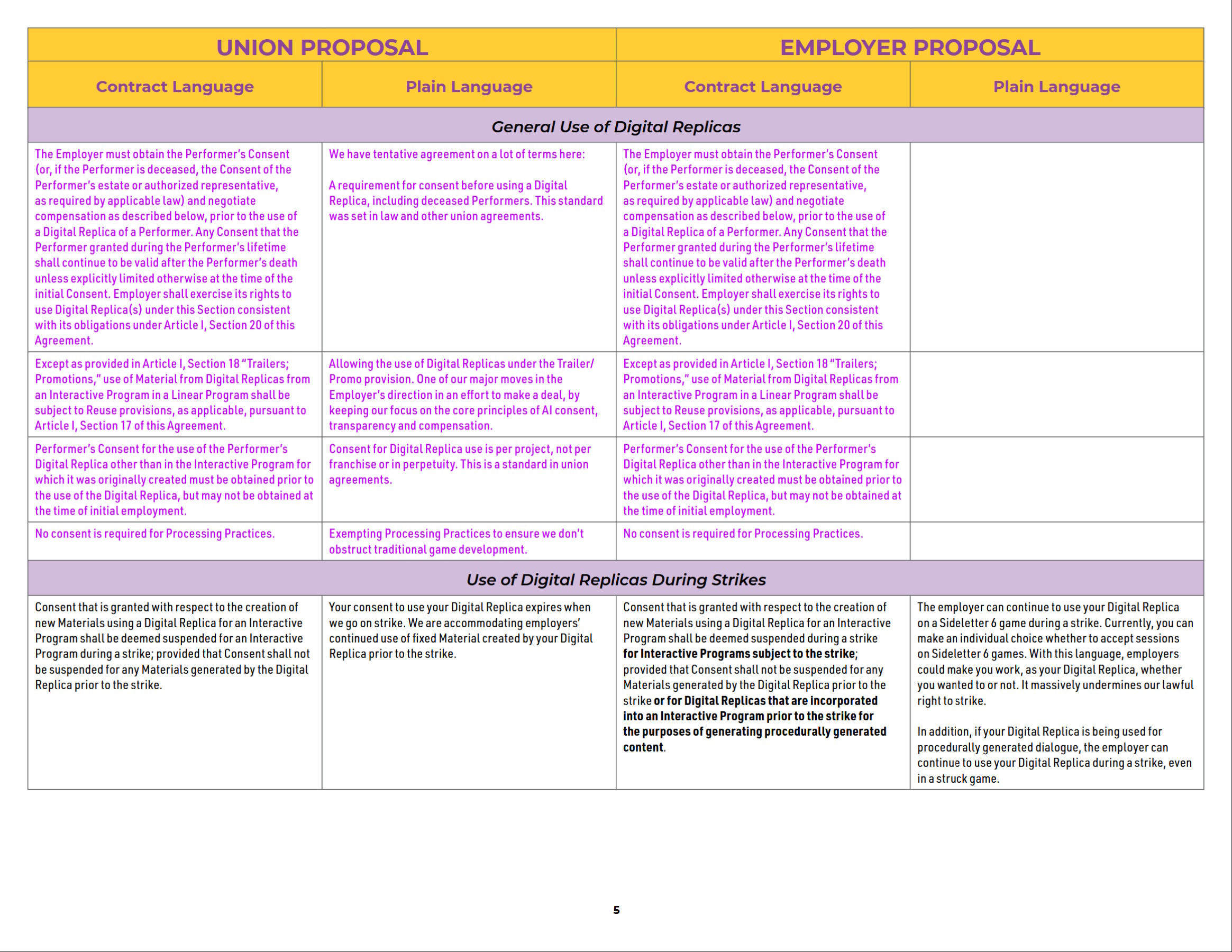

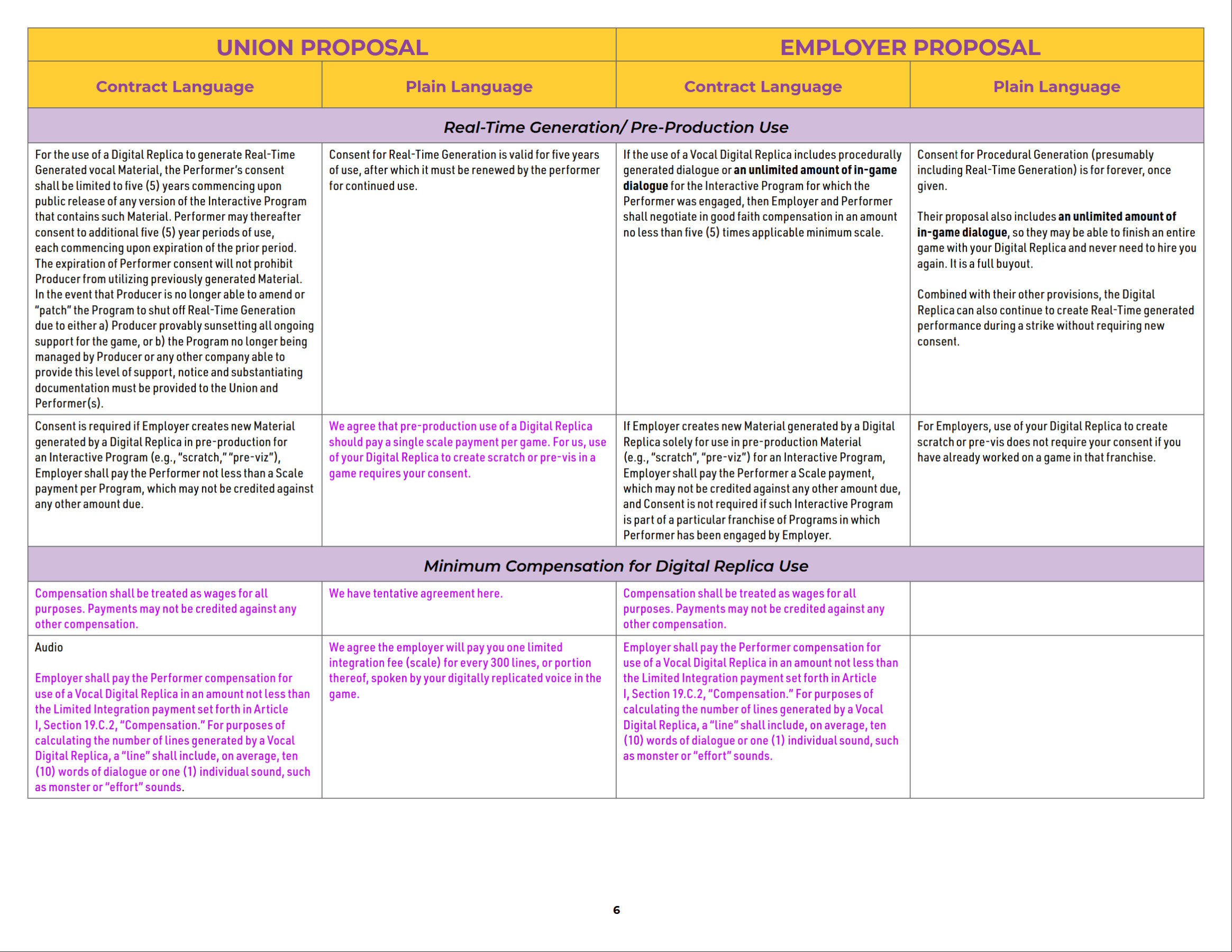

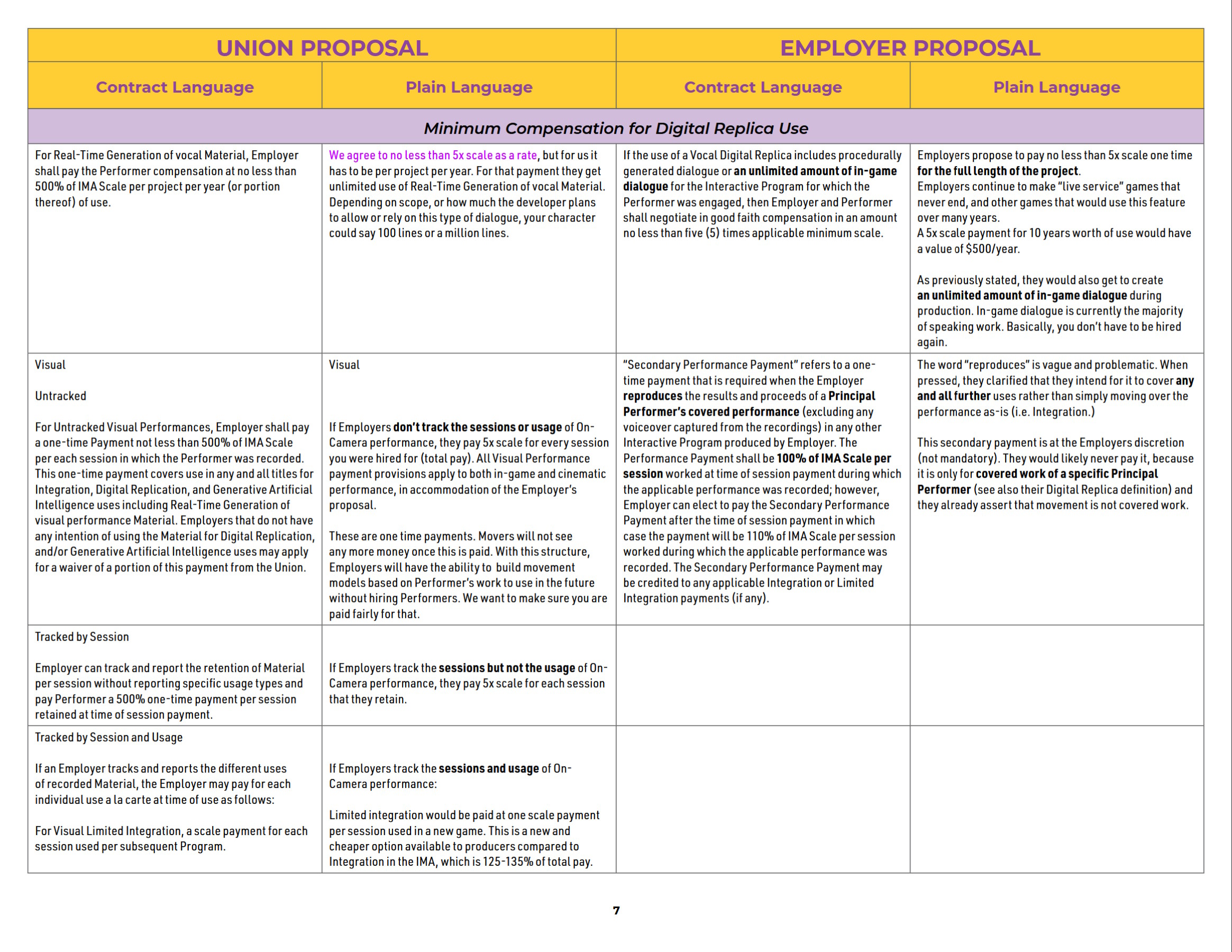

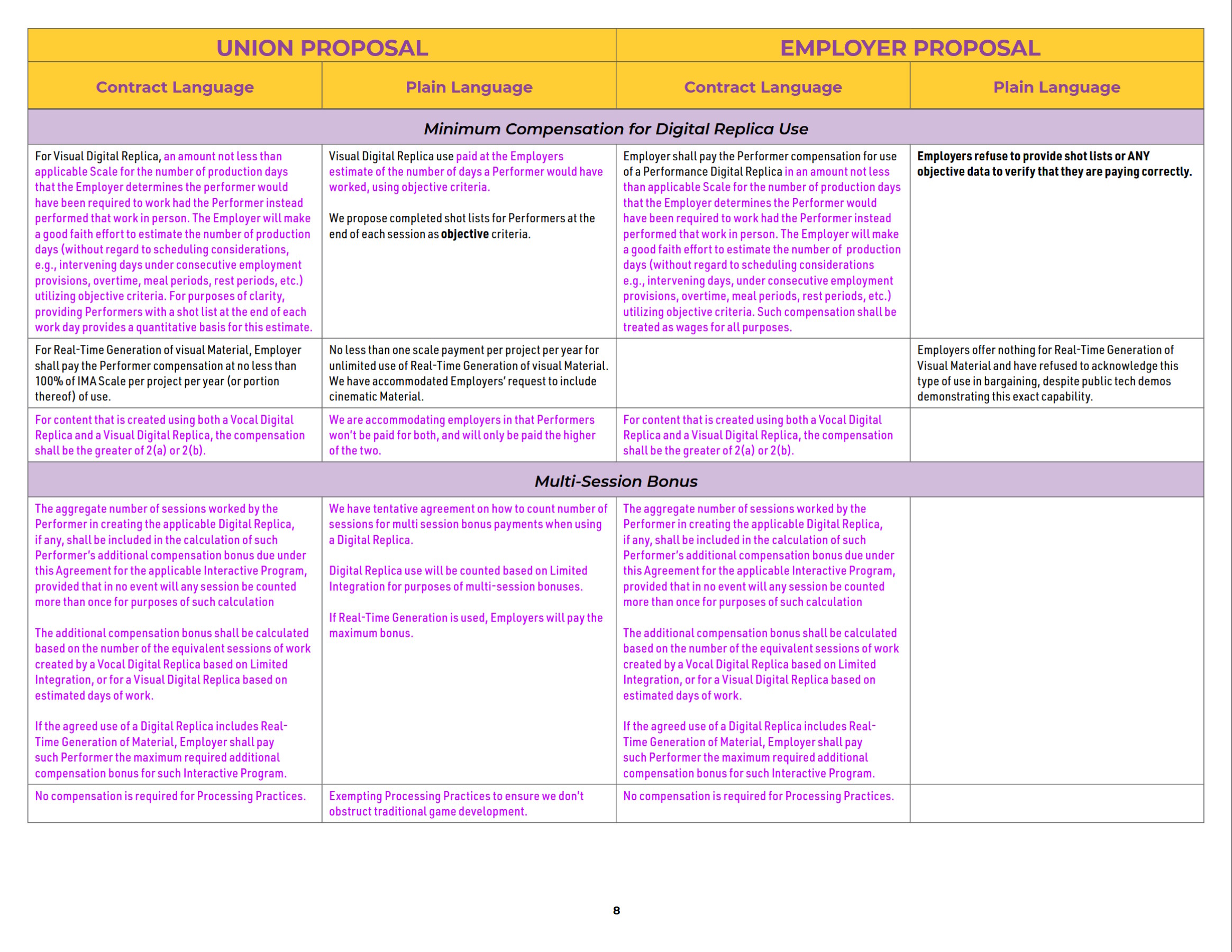

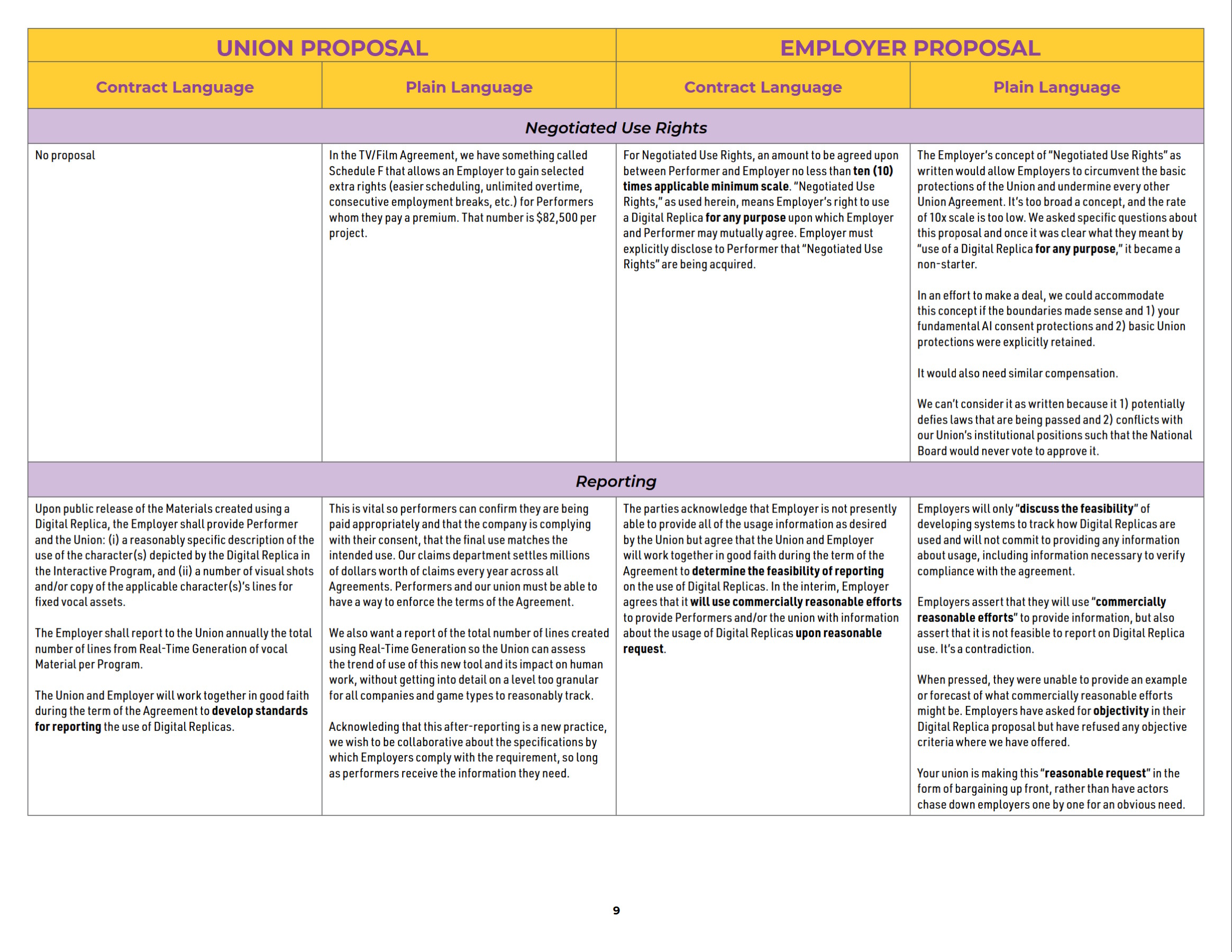

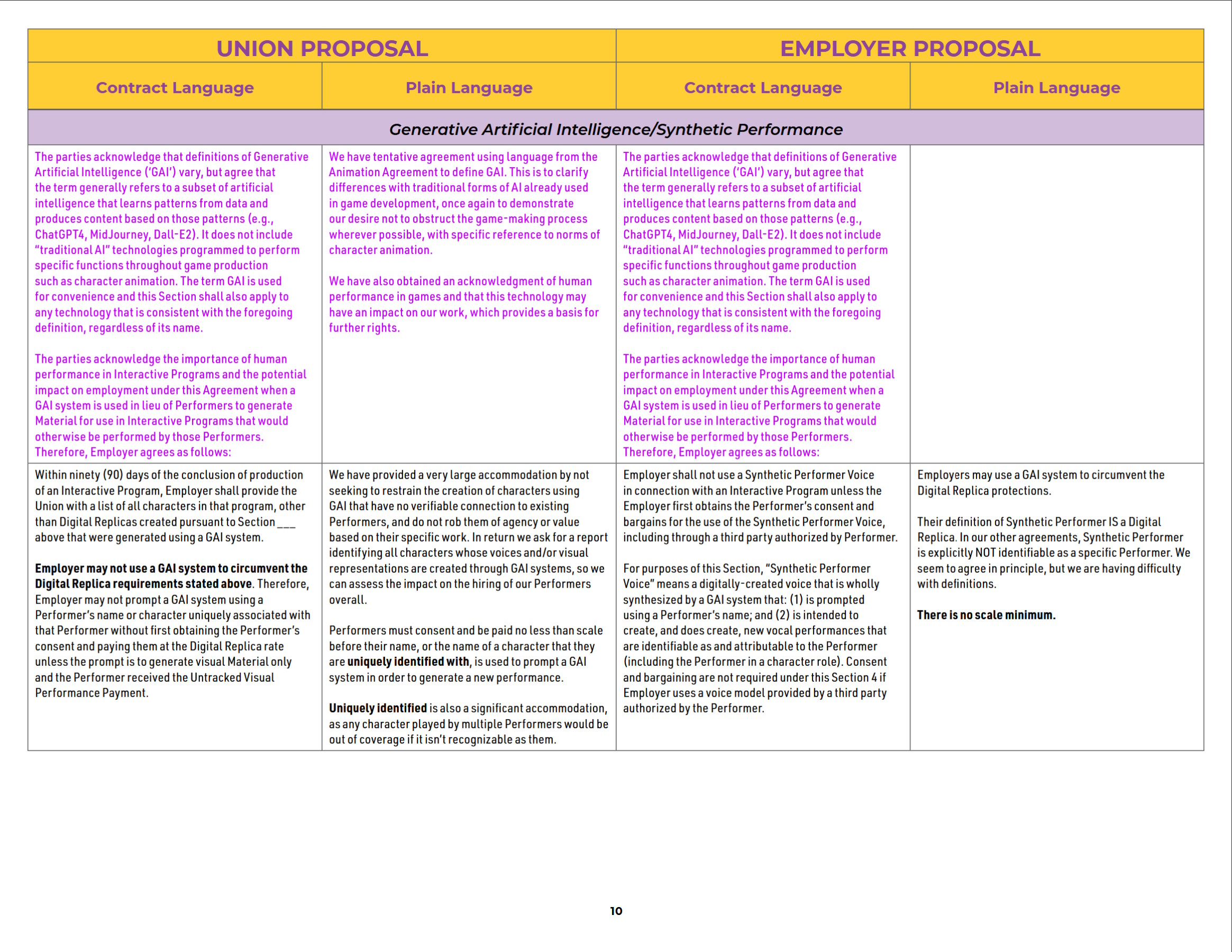

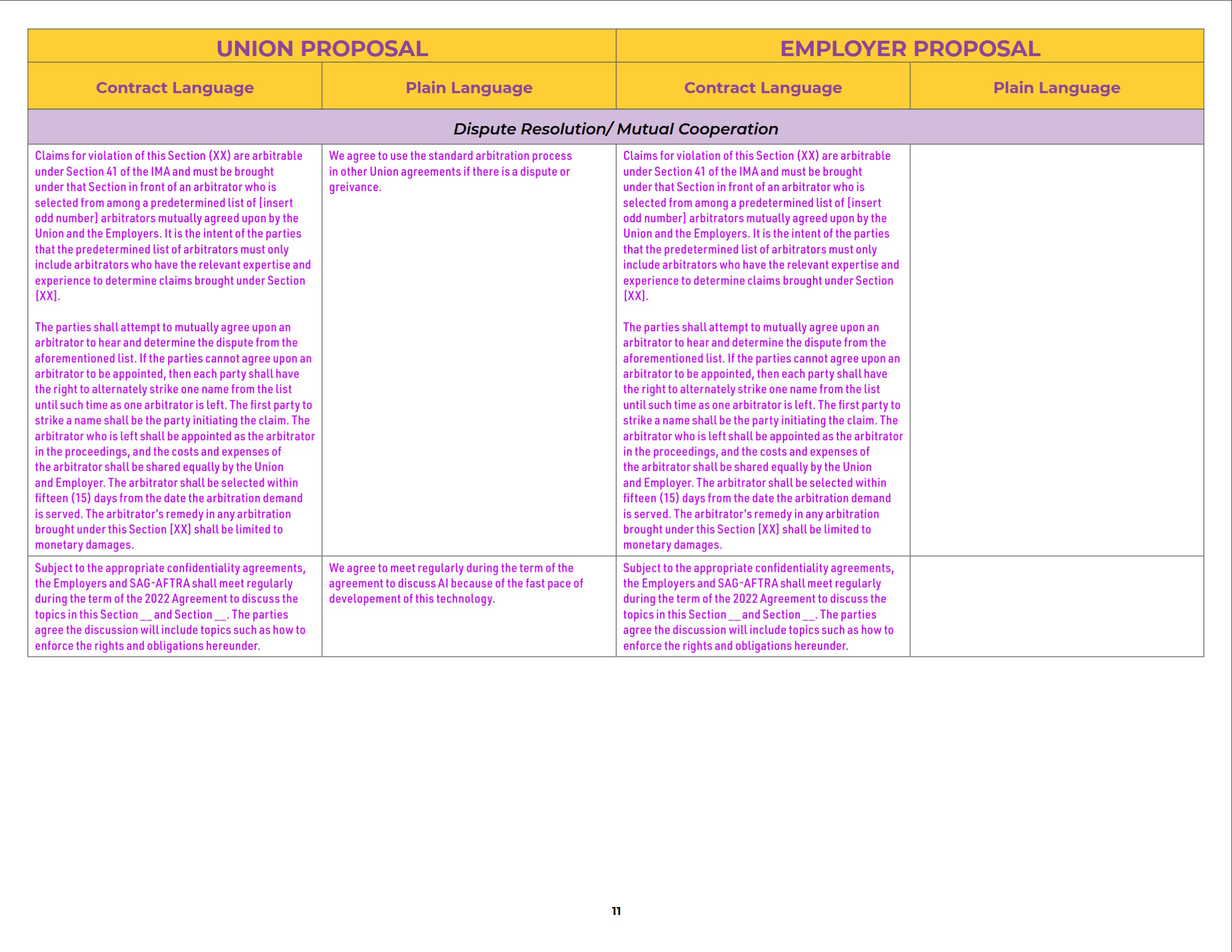

That clearly remains the case. SAG-AFTRA said in a new statement that the bargaining group representing game companies “would have you believe that we’re close to reaching a deal,” but insisted that isn’t actually the case. It also shared a chart of proposals to demonstrate “how far apart we remain on fundamental A.I. protections for all performers.”

“They want to use all past performances and any performance they can source from outside the contract without any of the protections being bargained at all,” SAG-AFTRA said.

“You could be told nothing about your replica being used, offered nothing in the way of payment, and you could do nothing about it. They want to be able to make your replica continue to work, as you, during a future strike, whether you like it or not. And once you’ve given your specific consent for how your replica can be used, they refuse to tell you what they actually did with it.”

The rise of AI is a major concern for voice and mocap actors in the game business, and rightly so. If game developers can reduce their budgets by using machine-generated voices rather than those of real people, it’s a safe bet that they will. Whether generative AI can match a real human performance is a matter of debate, but there’s no question that the technology is getting better. Eventually the two may become indistinguishable, or, if they can still be told apart, a significant portion of the gaming audience simply may not care.

We’re not quite at that point yet, as the strong negative reaction to Sony’s recently leaked work on an AI-powered Aloy makes clear. But that experimentation also makes clear that game makers are pursuing this kind of work, and while the very unnatural voice of that chatbot can easily be handwaved as a placeholder, it’s work toward a goal, and those systems will get better.

SAG-AFTRA warned that game companies are hoping union members “will turn on each other” as the strike drags on, but the game makers themselves are facing mounting pressure: League of Legends studio Riot Games was recently forced to recycle old voiceovers for some of its English-language skins because of the strike, and more recently Bungie said Destiny 2’s Heresy episode launched with some voice lines missing.

In February, more than 30 members of Apex Legends’ French cast (not members of SAG-AFTRA, but you know France and its unions) reportedly refused to sign a new contract because it required them to allow their work to be used to train generative AI systems.

“With their previously signed projects making their way through the production pipeline, employers are feeling the squeeze from the strike, as SAG-AFTRA members who work in video games continue to stand together and refuse to work without adequate protections,” the union wrote. “This is causing employers to seek other performers they can exploit to fill these roles, including those who don’t typically perform in games.

“If you’re approached for such a job, we urge you to seriously consider the consequences. Not only would you be undermining the efforts of your fellow members, but you’d be putting yourself at risk by working without protections against AI misuse. And ‘AI misuse’ is just a nice way of saying that these companies want to use your performance to replace you, without consent or compensation.”